As a writer, you’ve probably crafted cracking copy for your audience without really thinking about it. You know what works because you know what great writing is, and what it isn’t. It’s innate. With UX writing, though, the value isn’t in the words themselves; it’s in what those words do for users and the business. Using content tests and data to prove that value should be your north star as a UX writer, because UX writing sits at the intersection of data and creativity.

Getting that data in a way that offers insight rather than noise can be a challenge. Here are five tests you can run to help you develop rich data and curate insights you can use to make your words better for everybody.

A/B testing absolutely every variable is how Netflix made itself the world-class product experience it is today. Every UI change, including copy, underwent some sort of A/B testing to make sure that the changes being made were the right ones.

Simply put, A/B testing means testing a variant against a control to see which one comes out on top on a given metric. For a call to action (CTA) button, that metric would be clickthrough rate: the share of people who see the button and go on to click it.

You can show half your users the variant while you show the other half the control (your product team can help you set this up) to see what impact your changes actually have.
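If your product team wants a starting point, a 50/50 split is often done by hashing a stable user ID so that each person always sees the same version. The sketch below is one possible approach, not a description of any particular testing tool; the function name `assign_variant`, the experiment key, and the user ID format are all hypothetical.

```python
import hashlib

def assign_variant(user_id: str, experiment: str) -> str:
    """Deterministically place a user in 'control' or 'variant' (roughly 50/50)."""
    # Hash the experiment name together with the user ID so the same user
    # can land in different groups across different experiments.
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    return "variant" if int(digest, 16) % 2 else "control"

# Hypothetical usage: the IDs and experiment key are made up for illustration.
groups = [assign_variant(f"user-{i}", "cta-copy-test") for i in range(1000)]
print(groups.count("variant"), groups.count("control"))
```

Because the assignment depends only on the ID and the experiment name, a returning user keeps seeing the same copy, which keeps the comparison clean.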

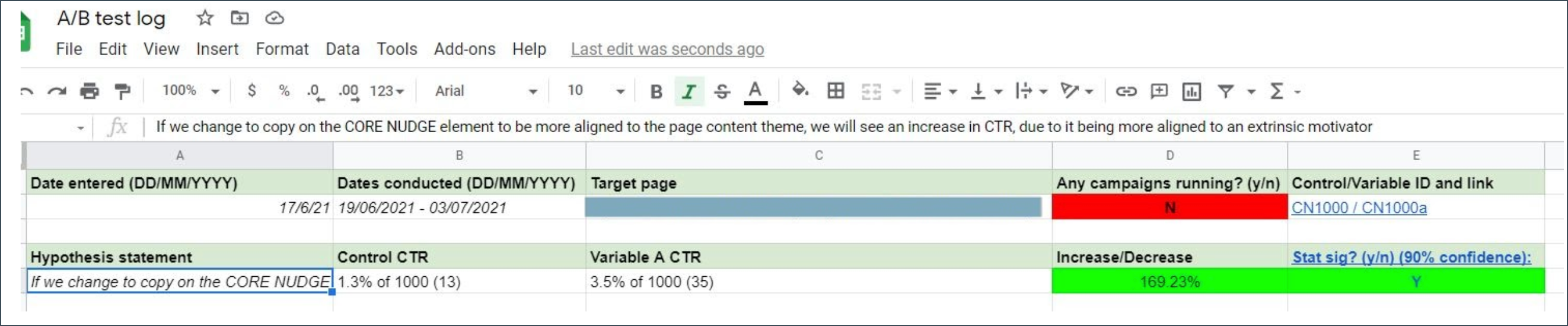

What an A/B test log may look like—as long as it’s organized, you’ll get value out of it

It’s super important that you keep a log of the tests you’re running, the tests you’ve already run, and the ones you want to run so you don’t end up doubling up. You don’t need to do anything too fancy for this—you can just put it in a shared spreadsheet.

Finally, check the results for statistical significance so you know whether there is a real, measurable impact or whether the numbers you’re seeing are a mirage. SurveyMonkey has a great tool for working this out.
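If you would rather see what a significance calculator is doing under the hood, one common approach for clickthrough rates is a two-proportion z-test. The sketch below assumes you have click and view counts for each version; the function name and the sample numbers are hypothetical, and online calculators may use a slightly different method.

```python
import math

def two_proportion_z_test(clicks_a, views_a, clicks_b, views_b):
    """Return (z, two-sided p-value) for the difference in clickthrough rate."""
    rate_a = clicks_a / views_a
    rate_b = clicks_b / views_b
    # Pooled rate: the clickthrough rate if both versions performed the same.
    pooled = (clicks_a + clicks_b) / (views_a + views_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / views_a + 1 / views_b))
    if std_err == 0:
        raise ValueError("no variation in the data; cannot compute a z-score")
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value

# Hypothetical numbers: control got 100 clicks from 1,000 views,
# the new copy got 150 clicks from 1,000 views.
z, p = two_proportion_z_test(100, 1000, 150, 1000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

A p-value below 0.05 is the conventional threshold for calling a result significant, though your team may agree on a stricter one.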

How do you make sure your words are easily understood by the largest number of users? Readability isn’t just a matter of figuring out whether your copy is helping users complete their task; it’s also a matter of accessibility.

Assigning your copy a Flesch-Kincaid grade level is one of the more tried-and-true methods of assessing readability. It is calculated from the average number of syllables per word and the average number of words per sentence. As a rule of thumb, you should aim for a grade level of around 8, but this may dial up or down depending on your audience. You can take a deep-dive into Flesch-Kincaid here.
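For a feel of how the number is produced, here is a minimal sketch of the Flesch-Kincaid grade level formula: 0.39 × (words per sentence) + 11.8 × (syllables per word) − 15.59. The syllable counter is a rough vowel-counting heuristic of my own, so expect scores to differ a little from dedicated readability tools; the function names are hypothetical.

```python
import re

def count_syllables(word):
    # Rough heuristic: count runs of vowels, then drop a trailing silent "e".
    word = word.lower()
    count = len(re.findall(r"[aeiouy]+", word))
    if word.endswith("e") and not word.endswith("le") and count > 1:
        count -= 1
    return max(count, 1)

def flesch_kincaid_grade(text):
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text)
    if not sentences or not words:
        raise ValueError("text needs at least one sentence and one word")
    syllables = sum(count_syllables(w) for w in words)
    return (0.39 * len(words) / len(sentences)
            + 11.8 * syllables / len(words)
            - 15.59)

# Compare two ways of writing the same instruction.
print(flesch_kincaid_grade("Save your work."))
print(flesch_kincaid_grade("Persist your modifications immediately."))
```

Very short strings such as button labels can produce extreme or even negative scores, so the formula is more useful on longer passages like onboarding text or help content.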

There are other tools you can use, however. For example, while undertaking a Teaching English as a Second Language course, I bumped into VocabKitchen.

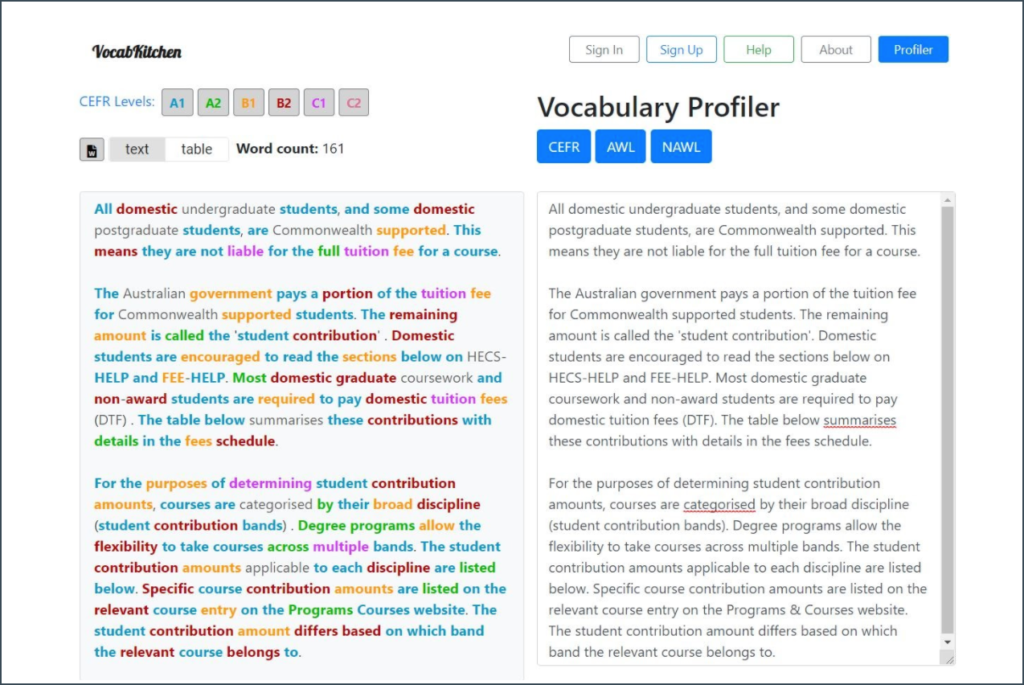

Tools like VocabKitchen can help identify terms which may be difficult for international audiences

It’s generally used to assess a text for suitability for various grade levels, but it will help you identify any difficult terms for non-native speakers of English. This is particularly useful if you have an international audience, but you’re not quite ready for full localization yet.

This isn’t so much a technical test as a stress test for your copy, especially if you’re working with a B2B product. Quite often, subject matter experts will know your users better than you, as they spend all day every day talking to them. For example, some of the SMEs you will work with are sales representatives.

They’re on the front lines with your customers, talking to them about their worries, stresses, and tasks to do—all while trying to sell them products—which is great insight for any content design work you may need to do.

They’ll be up on industry-specific jargon, so they’ll be a great judge of whether the language you’re using is too complicated, or not specific enough. This is also where challenges to your copy will come up, and this is a great thing. If you have the data to back your copy choices, then you’ve done your job as a UX writer.

It’s also a great way to bring important stakeholders along for the journey as you develop content—but remember that you’re there to draw out insight that will help your users, rather than as a tick-box exercise.

This is where you can really gather some great qualitative data and see how your users react to your copy. Your role as a UX writer is to help users complete key tasks as seamlessly as possible, and observing your users as they try to complete those tasks is really the only way to know whether you are doing that.

Sit with your users (in-person or remotely) and ask them to complete key tasks such as ‘add X item to your shopping cart’. While they do so, ask them to talk out loud about what they are doing and how they are finding the experience so far while you record key quotes.

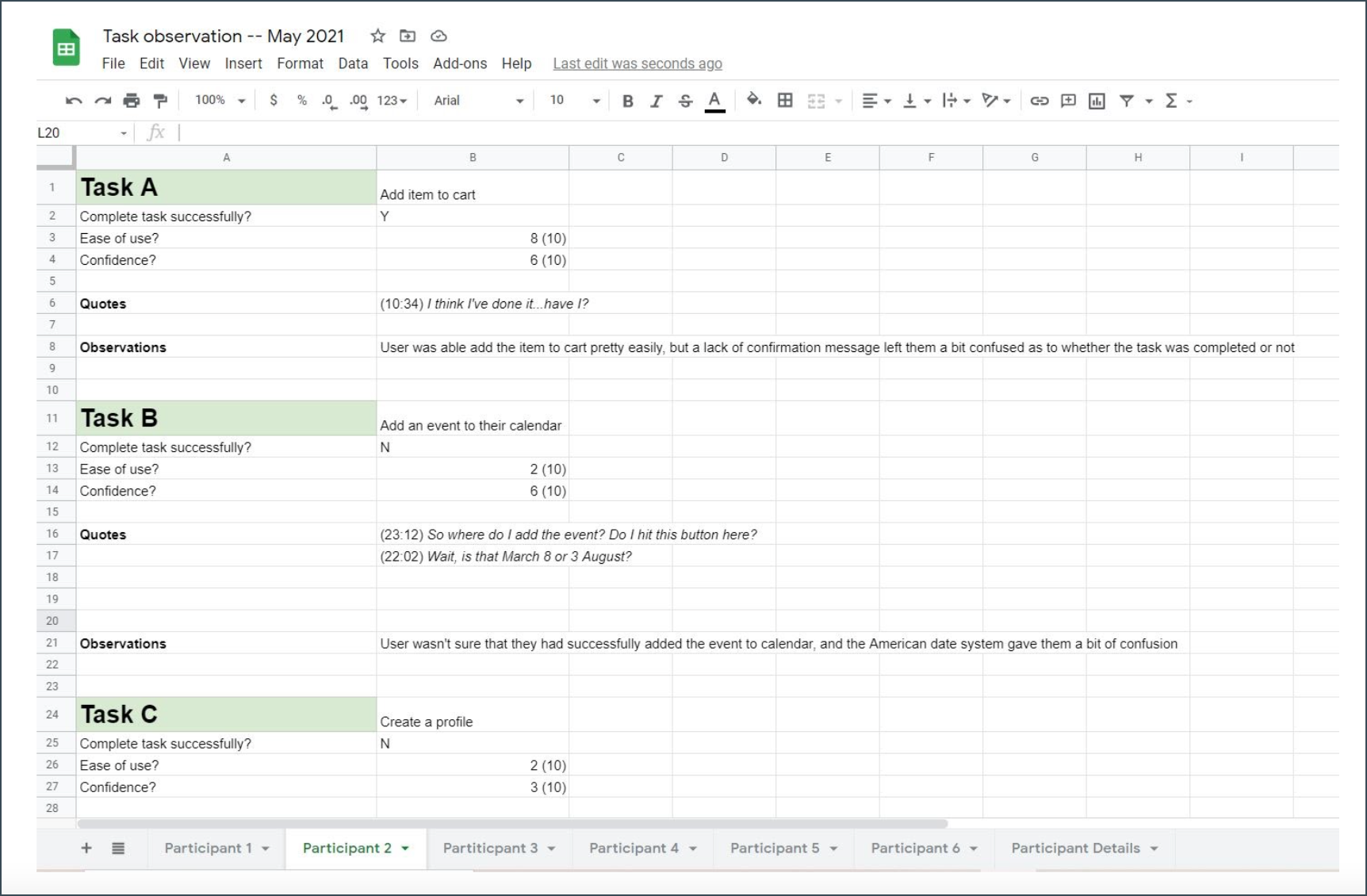

Sample task completion recording log—make sure you take down key quotes and timestamps so you can go back to the recording later

If you see them looking between two buttons and not sure which one to press, ask them what they’re thinking about. If they reply that they’re a bit confused, ask them what’s causing the confusion. It’s in this rich qualitative data that you’ll start to be able to answer the why of design choices, rather than the what that quantitative analysis offers.

If you’re all about information architecture and taxonomy, you’ll become intimately familiar with tree tests and card sorts. Tree testing is similar to task completion testing, in that you present users with your content categories and subcategories and then ask them where they would find specific information. If your category labeling is doing its job, they should be able to do it fairly easily.

Treejack is a good platform for conducting tree tests, while you can do a deep dive on tree testing over at Nielsen Norman Group.

Card sorting is all about understanding how users organize information into groups that make sense to them.

You give users a series of topics and labels and ask them to sort them into groups (these may be product groupings for an eCommerce site, for example). They can either label the groupings they’ve made (an open card sort) or sort them into predefined categories (a closed card sort).
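Once the sorts are in, a simple way to start making sense of them is to count how often each pair of cards ended up in the same group. The sketch below assumes each participant's result is recorded as a list of groups; the function name and the sample cards are hypothetical.

```python
from collections import Counter
from itertools import combinations

def co_occurrence(sorts):
    """Count how many participants placed each pair of cards in the same group.

    sorts: one entry per participant, each a list of groups (lists of card names).
    """
    pairs = Counter()
    for participant in sorts:
        for group in participant:
            # Sort the names so ("Jeans", "Shirts") and ("Shirts", "Jeans")
            # are counted as the same pair.
            for a, b in combinations(sorted(set(group)), 2):
                pairs[(a, b)] += 1
    return pairs

# Hypothetical results from two participants.
sorts = [
    [["Shirts", "Jeans"], ["Mugs"]],
    [["Shirts", "Jeans", "Mugs"]],
]
for pair, count in co_occurrence(sorts).most_common():
    print(pair, count)
```

Pairs that most participants group together are good candidates for sharing a category, while pairs that are rarely grouped probably belong apart.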

Testing and obtaining qualitative and quantitative data is what actually makes you a UX writer. It may be hard to admit, but anybody can write copy, CTAs, error and empty state messages—but a UX writer brings data to the party to inform choices.

Working with data and being able to back up your work with numbers is a great way to increase job security, sure, but way more importantly, you’ll be doing a disservice to your audiences if you just wing it. Making task completion easier is at the heart of what good UX writers do, and if you’re not using data to inform the words you write, you’re only getting half the story.

James McGrath is a UX Writer, CX Specialist, and sometimes journalist who lives in Melbourne, Australia. Connect with him on LinkedIn.
